Azure Durable Functions - Concurrent Processing

Fan-Out Pattern vs. Multi-Threading in an Activity

If you are developing microservices on the Microsoft Azure Functions infrastructure, you are likely aware of some of the constraints the architecture imposes on durable functions (aka orchestrations):

- 10-minute maximum run time for a function*

- Must be deterministic (so that it can be replayed and produce the same result)

- Single-threaded behavior

- I/O (input/output) operations are not allowed.

- Identity management is restricted to Azure Active Directory tokens (Azure AD)

These constraints help manage the complexity of running a process that requires stateful coordination between components in a serverless environment.

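* On the Consumption plan, the default timeout is 5 minutes; it can be raised to the 10-minute maximum with the functionTimeout setting in host.json.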

Yet, how is concurrent processing achieved within an orchestration?

Concurrent Processing with Durable Functions

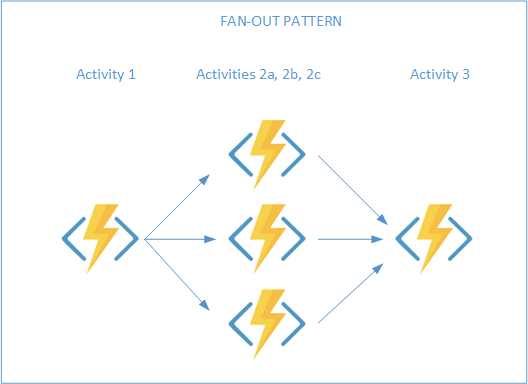

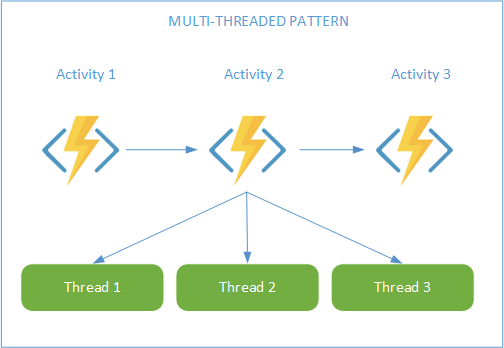

On the topic of single-threaded vs. multi-threaded, it is possible to parallelize tasks through the use of Activity Functions. One approach is to use a ‘Fan-Out’ pattern to run multiple functions concurrently. A second approach is to use multiple threads within an activity.

We looked at both options on our last project.

Fan-Out pattern – Multiple concurrent Activities

Orchestrations do allow activities to run in parallel: call IDurableOrchestrationContext.CallActivityWithRetryAsync once for each activity, store each returned task in a list, and then ‘await’ Task.WhenAny or Task.WhenAll on that list.

Code example:

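```csharp
// One task per blob; each activity call is scheduled right away, so they run in parallel.
var dataLoadTasks = new List<Task>();

foreach (var blobName in blobNames)
{
    dataLoadTasks.Add(
        context.CallActivityWithRetryAsync(
            nameof(ActivityLoadFile),
            retryOptions,
            blobName));
}

// Fan in: the orchestration resumes once every activity has finished.
await Task.WhenAll(dataLoadTasks).ConfigureAwait(true);
```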

Limiting the number of tasks that run in parallel

One thing to be cautious of is the amount of resources consumed when launching many parallel tasks. You may need to limit the number of tasks launched at once or increase your Azure resources.

To limit the number of parallel tasks, throttle how many are running at one time: before starting a new activity, wait for one of the running activities to finish if the limit has been reached.

Code Example:

```csharp
var dataLoadTasks = new List<Task>();

var numberofTasksToRunInParallel = 2;

foreach (var blobName in blobNames)
{
    // Fan out to a configured threshold: once the limit is reached,
    // wait for one of the running activities to finish first.
    var runningTasks = dataLoadTasks.Where(t => !t.IsCompleted).ToList();

    if (runningTasks.Count >= numberofTasksToRunInParallel)
    {
        await Task.WhenAny(runningTasks).ConfigureAwait(true);
    }

    dataLoadTasks.Add(
        context.CallActivityWithRetryAsync(
            nameof(ActivityLoadFile),
            retryOptions,
            blobName));
}

// Wait for the remaining activities to finish.
await Task.WhenAll(dataLoadTasks).ConfigureAwait(true);
```
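The sliding-window throttle above can be checked outside Azure. The sketch below is plain .NET, not Durable Functions: it reuses the same `Task.WhenAny` loop but swaps `CallActivityWithRetryAsync` for a hypothetical `SimulateLoadAsync` that records how many loads are in flight at once. `Task.Delay` is only acceptable here because this is not orchestrator code; inside an orchestrator, only Durable APIs (such as `context.CreateTimer`) should be awaited.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public static class ThrottleDemo
{
    private static readonly object Gate = new object();
    private static int inFlight;
    private static int peakInFlight;

    // Hypothetical stand-in for context.CallActivityWithRetryAsync: records how many
    // simulated loads are running at the same time, then "works" for 50 ms.
    private static async Task SimulateLoadAsync(string blobName)
    {
        lock (Gate)
        {
            inFlight++;
            peakInFlight = Math.Max(peakInFlight, inFlight);
        }

        await Task.Delay(50);

        lock (Gate)
        {
            inFlight--;
        }
    }

    // Same sliding-window throttle as the orchestrator example.
    // Returns the highest number of loads that were in flight simultaneously.
    public static async Task<int> RunAsync(IEnumerable<string> blobNames, int numberofTasksToRunInParallel)
    {
        lock (Gate) { inFlight = 0; peakInFlight = 0; }
        var loadTasks = new List<Task>();

        foreach (var blobName in blobNames)
        {
            var runningTasks = loadTasks.Where(t => !t.IsCompleted).ToList();
            if (runningTasks.Count >= numberofTasksToRunInParallel)
            {
                // Pause the loop until at least one running load has finished.
                await Task.WhenAny(runningTasks);
            }

            loadTasks.Add(SimulateLoadAsync(blobName));
        }

        await Task.WhenAll(loadTasks);
        lock (Gate) { return peakInFlight; }
    }
}
```

Called with ten blob names and a limit of 2, `RunAsync` should never report more than two loads running at once, which is the guarantee the orchestrator loop relies on.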

NOTE: The number of tasks to run in parallel can be read from an app setting instead of being hard-coded. This way it can be changed through the Azure Portal without redeploying the function.
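Azure Functions exposes app settings to the running process as environment variables, so a minimal sketch of reading the limit could look like the helper below. The setting name `MaxParallelFileLoads` is hypothetical. One caveat: the Durable Functions code constraints advise against reading environment variables inside orchestrator code, because the value could change between replays. For the Fan-Out approach, read the value in the client (starter) function or an activity and pass it into the orchestration as input; for the multi-threaded approach, the activity can call the helper directly.

```csharp
using System;

public static class ParallelismSettings
{
    // Reads a positive integer app setting, falling back to defaultValue when the
    // setting is missing, is not a number, or is less than 1.
    public static int Read(string settingName, int defaultValue)
    {
        // App settings configured in the Azure Portal surface as environment variables.
        string raw = Environment.GetEnvironmentVariable(settingName);
        return int.TryParse(raw, out int value) && value > 0 ? value : defaultValue;
    }
}
```

For example, `var numberofTasksToRunInParallel = ParallelismSettings.Read("MaxParallelFileLoads", 2);` keeps the original default of 2 when the setting is absent.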

Pros:

- On the Consumption plan, an activity can run for at most 10 minutes. Because each activity gets its own 10 minutes, the overall processing can span a longer time frame.

Cons:

- This approach was slower to process the same set of files, likely because starting an activity has significant overhead.

- The orchestrator code was more cluttered. To keep it organized, we put the processing that was to be run in parallel into a local method in the orchestration class.

Multi-threading in an Activity

As an alternative to spinning up parallel activities, an activity can use threading to accomplish the same goal. In this approach, the same technique as in the first example can be used to execute a process in parallel.

Code Example:

```csharp
var fileLoadTasks = new List<Task>();

var numberofTasksToRunInParallel = 2;

foreach (var blobName in blobNames)
{
    // Once the limit is reached, wait for one of the running loads to finish.
    var runningTasks = fileLoadTasks.Where(t => !t.IsCompleted).ToList();

    if (runningTasks.Count >= numberofTasksToRunInParallel)
    {
        await Task.WhenAny(runningTasks).ConfigureAwait(true);
    }

    fileLoadTasks.Add(this.LoadFile(blobName));
}

// Wait for remaining tasks to finish
await Task.WhenAll(fileLoadTasks).ConfigureAwait(true);
```

In the example above, the LoadFile routine is a private method within the activity.

The number of tasks run concurrently is throttled with the same technique as in the Fan-Out example. Again, the value can be read from an app setting instead of being hard-coded.
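On .NET 6 or later, the same throttling inside an activity can also be expressed with `Parallel.ForEachAsync` and `ParallelOptions.MaxDegreeOfParallelism` instead of the hand-written `WhenAny` loop. This is a swapped-in technique, not the one used on the project, and it belongs only in the activity: it schedules work on the thread pool, which orchestrator code must not do. `LoadFileAsync` below is a hypothetical placeholder for the activity's private `LoadFile` method.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

public static class ActivityFileLoader
{
    // Loads every blob with at most maxParallel loads running at once and
    // returns the names that were loaded (order is not guaranteed).
    public static async Task<IReadOnlyCollection<string>> LoadAllAsync(
        IEnumerable<string> blobNames,
        int maxParallel,
        CancellationToken cancellationToken = default)
    {
        var loaded = new ConcurrentBag<string>();
        var options = new ParallelOptions
        {
            MaxDegreeOfParallelism = maxParallel,
            CancellationToken = cancellationToken,
        };

        await Parallel.ForEachAsync(blobNames, options, async (blobName, token) =>
        {
            await LoadFileAsync(blobName, token);
            loaded.Add(blobName);
        });

        return loaded;
    }

    // Hypothetical placeholder: a real version would download and process the blob.
    private static async Task LoadFileAsync(string blobName, CancellationToken token)
    {
        await Task.Delay(20, token);
    }
}
```

One behavioral difference to weigh: `Parallel.ForEachAsync` stops starting new iterations once a body throws, whereas the `WhenAny` loop keeps launching loads and only surfaces failures at the final `Task.WhenAll`.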

Pros:

- It was faster than the Fan-Out pattern, likely because it avoids the overhead of starting an activity for each file.

- The orchestrator was cleaner. The code needed to launch and throttle the parallel work was moved into the activity.

- More encapsulated. The orchestration doesn’t need to ‘know’ how the processes get run; it only needs to know the result status of the processing.

Cons:

- On the Consumption plan, an activity can run for at most 10 minutes. Because all the processing happens within a single activity, all the parallel work must complete within those same 10 minutes.

Which approach is better?

This is a great question and the answer will likely depend on your scenario.

- Does the overall processing need to go beyond 10 minutes, even with parallelizing the tasks?

- Does your budget allow you to use a Premium plan, where the 10-minute restriction does not apply?
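A rough way to answer the first question is to estimate the multi-threaded activity's wall-clock time. With n files, an average of t seconds per file, and p files loaded in parallel, the files are processed in about ceil(n / p) "waves", so the activity needs roughly ceil(n / p) × t seconds, to be compared against the 600-second limit. This back-of-the-envelope sketch assumes every file takes about the same time and ignores start-up overhead; the 80% headroom factor is an arbitrary illustrative choice.

```csharp
using System;

public static class TimeBudget
{
    // Rough wall-clock estimate for loading fileCount files, perFileSeconds each,
    // with maxParallel loads running at once (files are processed in "waves").
    public static double EstimateSeconds(int fileCount, double perFileSeconds, int maxParallel)
    {
        int waves = (fileCount + maxParallel - 1) / maxParallel; // ceiling division
        return waves * perFileSeconds;
    }

    // True when the estimate fits inside the activity timeout with some headroom.
    public static bool FitsInActivity(double estimateSeconds, double timeoutSeconds = 600, double headroom = 0.8)
    {
        return estimateSeconds <= timeoutSeconds * headroom;
    }
}
```

With hypothetical figures of 200 files at 10 seconds each, 4 in parallel gives 50 waves, or about 500 seconds, which exceeds 480 seconds (80% of 600), so Fan-Out or more parallelism would be worth considering; 8 in parallel gives about 250 seconds, which fits.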

We have used both techniques for different purposes.

Fan-Out Use

We employed the Fan-Out architecture for a use case where we needed to pull data from a third-party system based on a date range. The amount of data to be retrieved varied from one day to the next and was too large to pull in one request. We used the Fan-Out architecture to split the work among multiple activities, each requesting one hourly block of data concurrently.
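The splitting step can be sketched as a pure helper that turns a date range into hourly blocks, each of which would become the input to one `CallActivityWithRetryAsync` call in the fan-out loop. `HourBlock` and `DateRangeSplitter` are hypothetical names. Because the helper depends only on its inputs, it is safe to call in an orchestrator as long as the range itself is passed in as input (or derived from `context.CurrentUtcDateTime`) rather than from `DateTime.UtcNow`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical input for one activity call: a half-open [Start, End) window.
public sealed record HourBlock(DateTime Start, DateTime End);

public static class DateRangeSplitter
{
    // Splits [rangeStart, rangeEnd) into consecutive one-hour blocks; the last
    // block is shortened if the range does not end on an hour boundary.
    public static List<HourBlock> SplitIntoHours(DateTime rangeStart, DateTime rangeEnd)
    {
        if (rangeEnd <= rangeStart)
            throw new ArgumentException("rangeEnd must be after rangeStart.", nameof(rangeEnd));

        var blocks = new List<HourBlock>();
        for (var start = rangeStart; start < rangeEnd; start = start.AddHours(1))
        {
            var end = start.AddHours(1);
            blocks.Add(new HourBlock(start, end < rangeEnd ? end : rangeEnd));
        }
        return blocks;
    }
}
```

A one-day range yields 24 blocks, and consecutive blocks share a boundary, so no data is requested twice or skipped.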

Multi-Threaded Use

In a file load use case, we found that parallelizing the work inside the Activity through multi-threading worked better. We had a couple hundred files to load from a single source. Our first attempt used the Fan-Out pattern; it worked, yet took longer than expected. We then prototyped the multi-threaded approach and found that it took 25% of the time the Fan-Out took to load all the files.

Unexpected benefit of Multi-threaded approach

The multi-threaded approach to file loading had another, unexpected benefit: fewer SEH exceptions when debugging our function app in Visual Studio.

What is an SEH exception?

An SEH (structured exception handling) exception occurs when an external component throws an exception. According to Microsoft, an SEHException is generated when unmanaged code throws an exception that hasn't been mapped to another .NET Framework exception.

When debugging our solution in Visual Studio, these exceptions occurred intermittently when accessing blobs in Azure Blob Storage, sometimes so often that we couldn't step through the code. After switching to the multi-threaded approach, the SEHException still happened, but very infrequently.

NOTE: We attempted to isolate the specific cause without success. It seems to point to an issue between the blob storage libraries and Visual Studio. These exceptions only occurred when debugging the code; they never occurred when running without debugging.
